Contracts, agreements and other legal documents can seem, at a glance, complicated and hard to parse. After all, the language of the law has to be precise and cover a lot of situations, so it’s almost always best to just get a lawyer. But lawyers can be pretty expensive, so some folks take matters into their own hands.

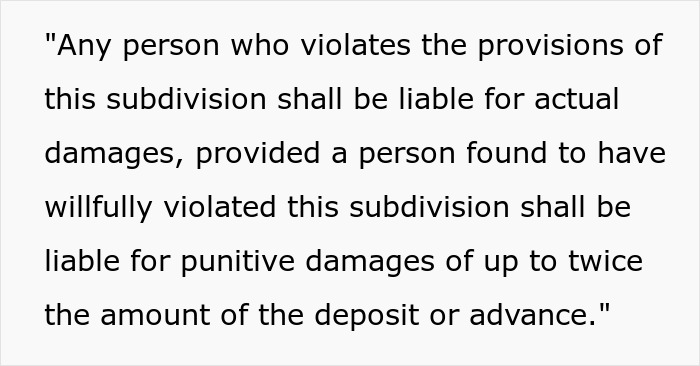

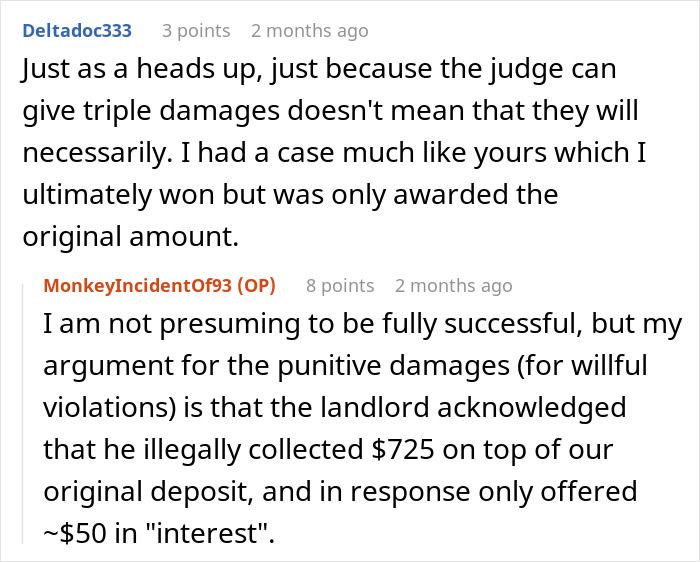

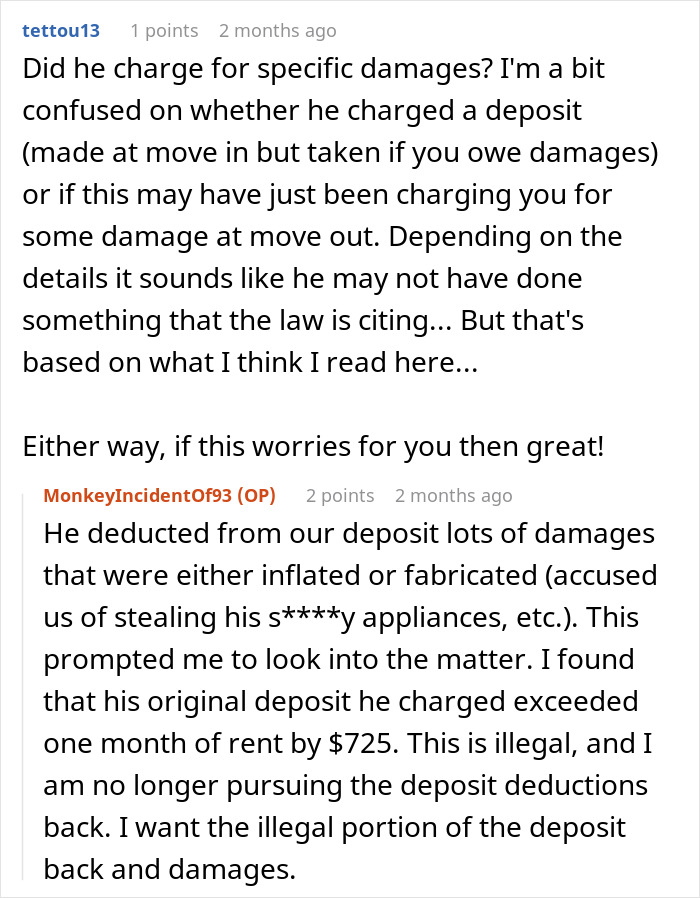

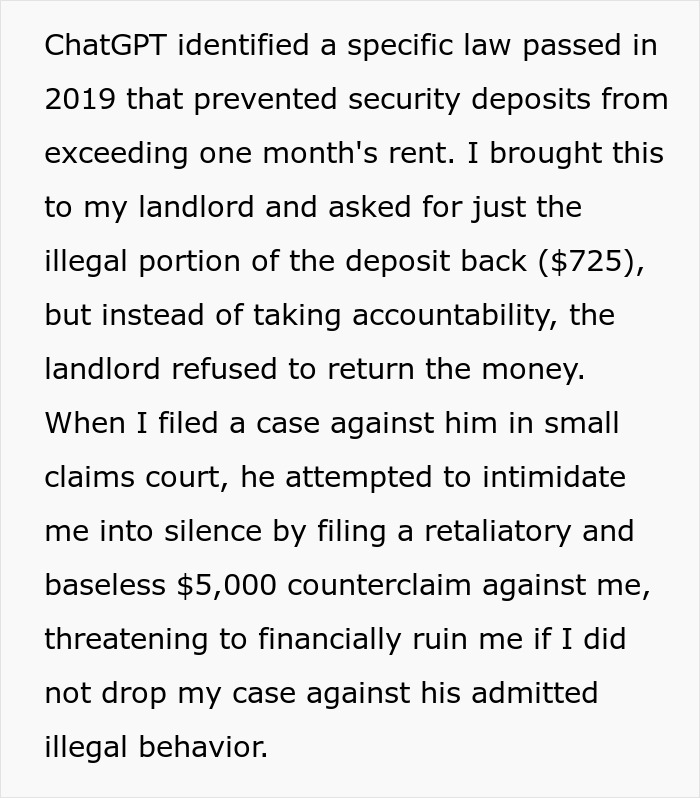

A tenant shared how they used AI to analyze their rental agreement so as to sue their landlord for a fraudulent deposit deduction. They later shared an update about the court’s decision. We reached out to them via private message and will update the article when they get back to us.

Some landlords do try to fraudulently take people’s money

Image credits: frimufilms (not the actual image)

But one netizen decided to use AI to help them fight an unlawful deduction

Image credits: freepik (not the actual image)

They gave a few more details

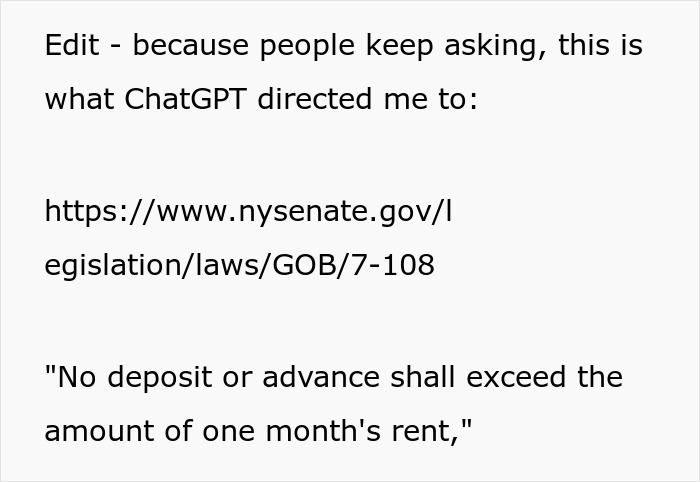

Image credits: MonkeyIncidentOf93

AI tools do have a number of drawbacks

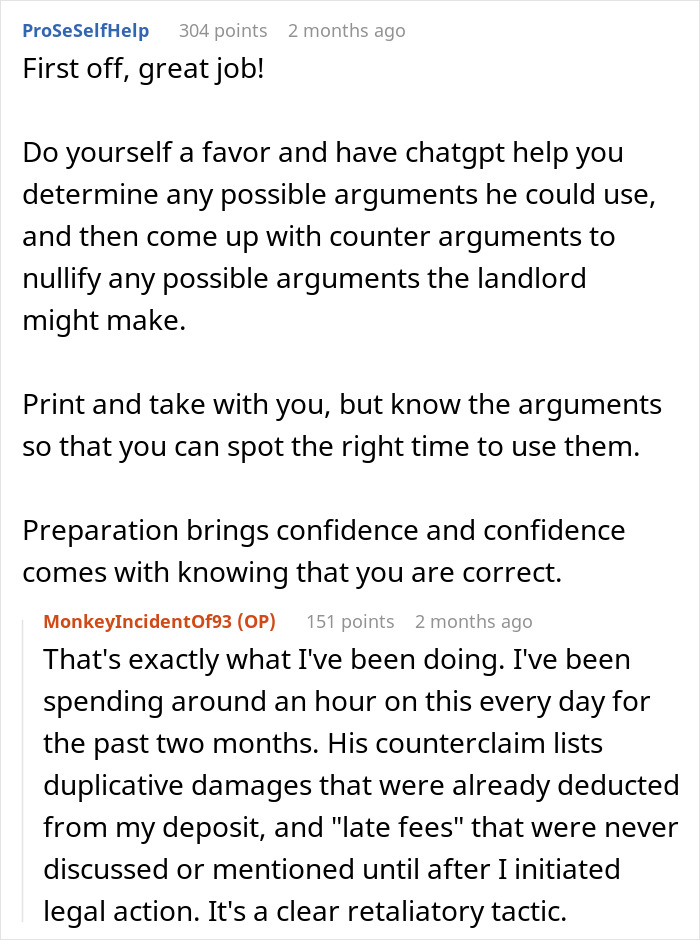

This netizen has struck upon a very useful feature of any large language model (LLM): the ability to look at a large piece of text and pull out the useful bits. This applies to both the rental agreement and local laws, because tracking down the necessary legal rules can be quite the ordeal if you don’t know what you’re doing.

However, as many commenters note, the AI, ChatGPT in this particular case, doesn’t exactly replace your lawyer. It really does the job of a paralegal, while the tenant still has to double check everything. As they themselves claim, it’s probably a lot safer to just get a lawyer, but paying $20 for ChatGPT is definitely cheaper.

Double checking its “work” isn’t just recommended, it’s absolutely necessary. Remember, despite being labeled as artificial “intelligence,” these are, at best, very proficient chatbots. They do not know anything and simply summarize the billions of words of text found in whatever their data set is.

This text, in turn, is taken from the internet, which means it will, inevitably, contain falsehoods. After all, humans make mistakes all the time, which doesn’t stop folks from being “confidently incorrect.” Similarly, people can often hold outdated views or misremember things, which, again, does not stop them from confidently writing them down.

The risk is that an LLM will begin to amplify a mistake or misunderstanding by repeating it over and over again. This is a form of algorithmic bias, where an LLM prioritizes some information simply because it is more common. The easiest example is the fact that most text online is in English, which skews most of an LLM’s output towards Anglo-centric viewpoints.

Image credits: Solen Feyissa (not the actual image)

But LLMs can be very useful tools in the right hands

Setting that aside, this post does show just how useful an LLM can be at breaking down tasks that might seem complicated. After all, most places have rigorous protections for tenants, but they tend to be buried in legal codes most folks do not have the time, skill or energy to dig through.

Indeed, the real takeaway from this is that most of us have probably been somehow defrauded by a landlord or perhaps employer just because we didn’t know the exact law or how to take any action against them. Having a tool that can answer questions or at least point you in the right direction can be absolutely invaluable.

Double and triple checking the information is still very important. Some companies have already put AI “agents” in place, much to the chagrin of folks who need to speak to a customer support representative. In extreme cases, they even cause very serious issues. For example, an AI-powered recipe bot for a New Zealand grocery store suggested a recipe for an “aromatic water mix” that combined bleach and ammonia. The fumes from this mix can kill you.

Bored Panda has even covered similar stories, such as a mushroom identification book that was actually AI written, which led to a family being hospitalized after eating supposedly “safe” mushrooms. So if you have an annoying landlord, it can be useful to do your own research, but always, always, always verify.

Image credits: Vitaly Gariev (not the actual image)

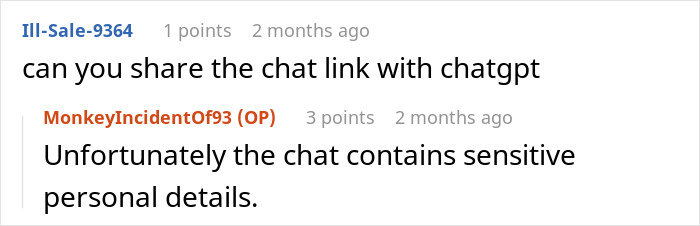

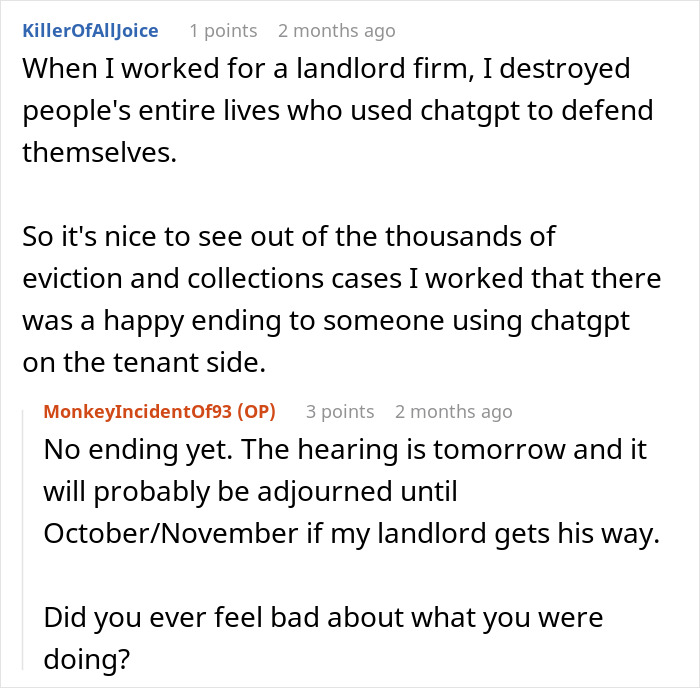

The tenant also chatted with some commenters

Later, they shared how they did in court

Image credits: Wavebreak Media (not the actual image)

Image credits: rawpixel.com (not the actual image)

Image credits: Solen Feyissa (not the actual image)

Image credits: kuprevich (not the actual image)

Image credits: MonkeyIncidentOf93

People applauded their plan


Who, apparently, really likes to try to sue people: https://www.counsel.nysed.gov/Decisions/volume62/d18195

My experience with AI chat - not chatgpt though - is not great, and I often have to correct it. I tried the 6 degrees of separation from Kevin Bacon thing, and it ended up directing me to a film that he was apparently in in 1946. Double check everything it tells you!

Lots of examples of ChatGPT having "hallucinations" and citing completely fake links, statutes, and cases.

