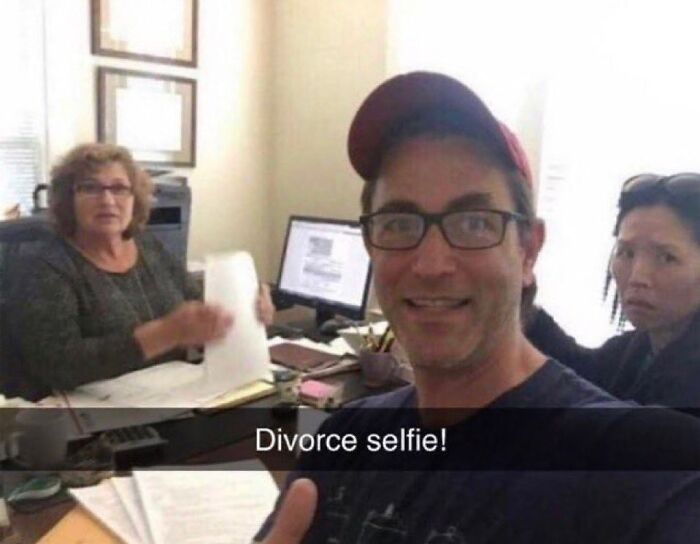

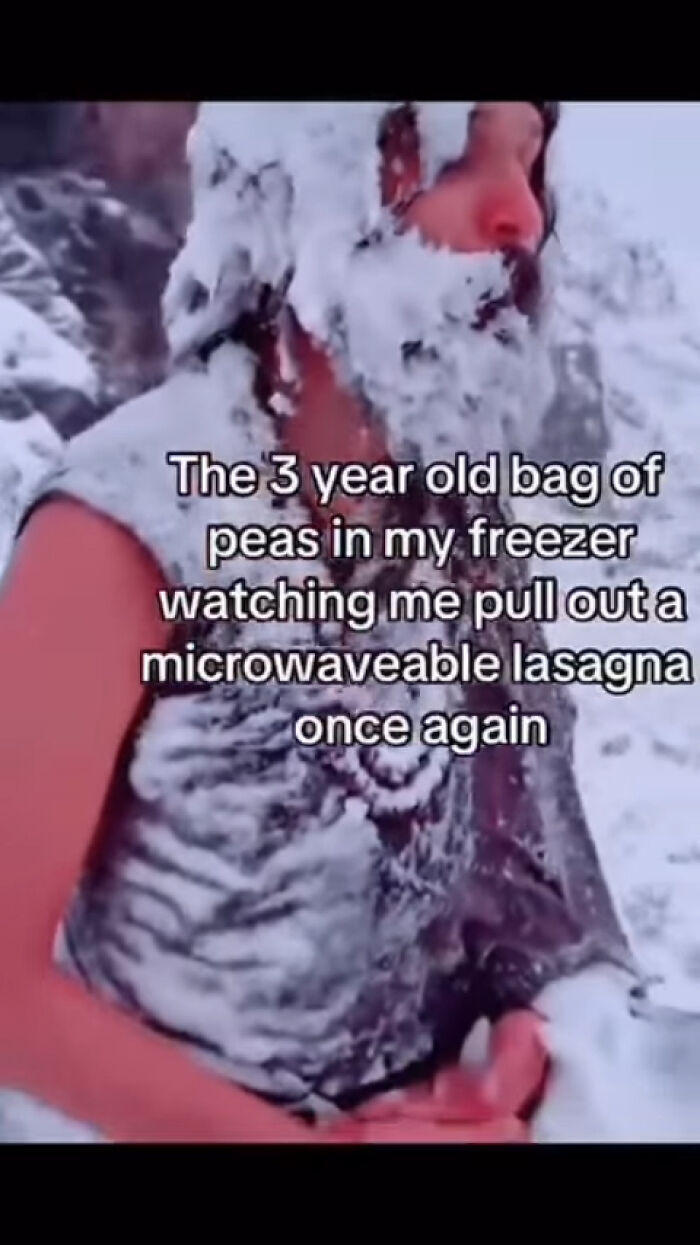

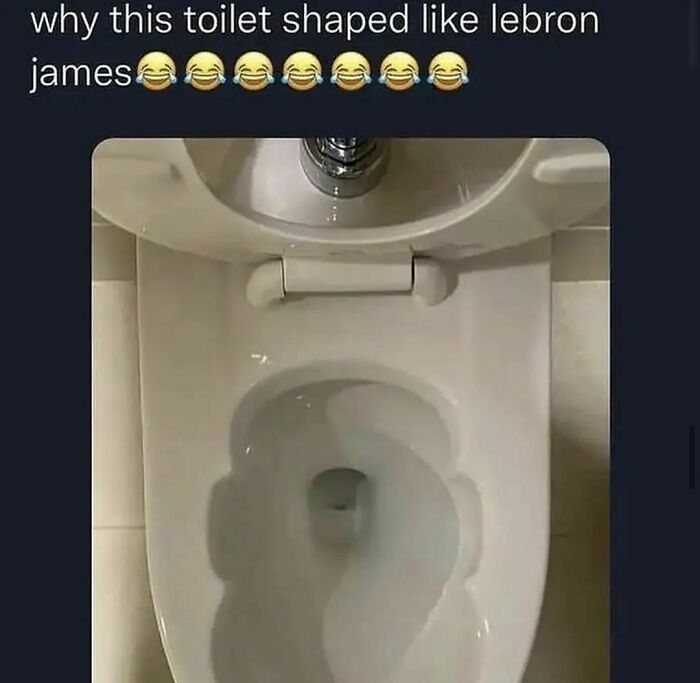

This Instagram Account Posts Hilariously Chaotic Pictures, And These 50 Are Just Too Much

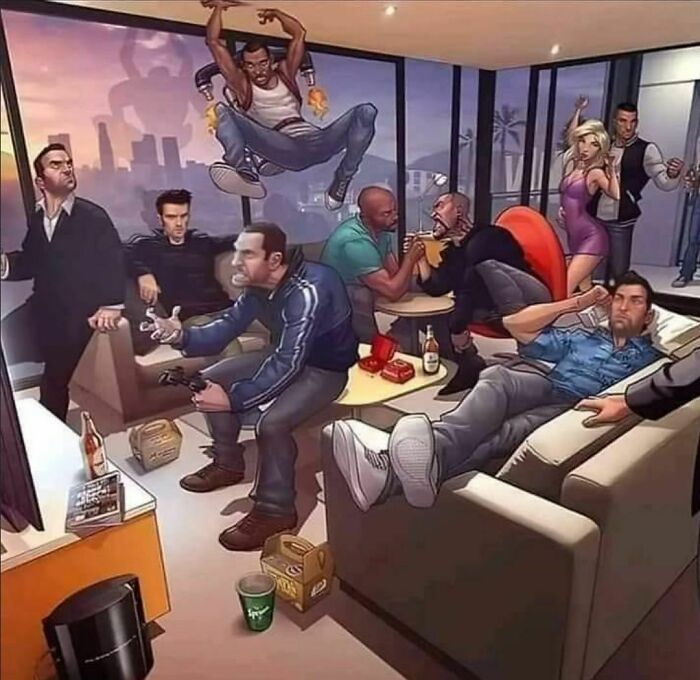

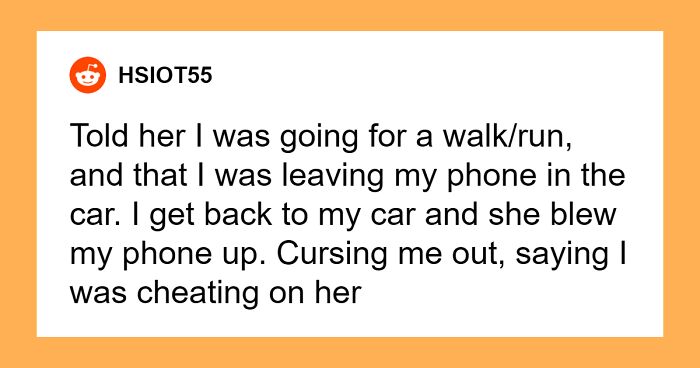

In the past few years, online content has undergone an intense transformation, and the main driver is artificial intelligence (AI). Its presence is felt in the news, photo editing, video production, you name it. However, while much of the internet embraces this new technology, some purists reject it altogether, and the Instagram account 'Things A.I. Could Never Recreate' sides with them. This fun social media project showcases unique photos that capture what it's like to be human. Whether we're talking about once-in-a-lifetime coincidences, unfortunate fails, or good old irony, it's all there.

More info: Instagram

This post may include affiliate links.

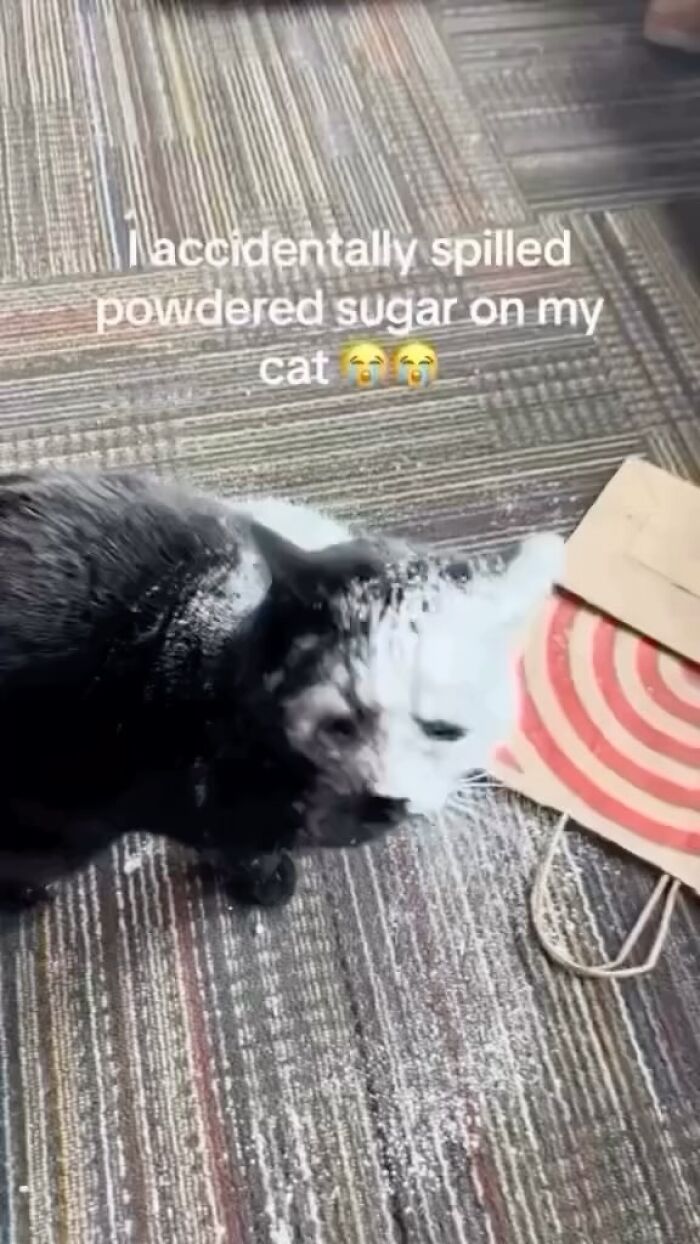

If that cat isn’t the platoon leader then there’s something wrong in the world.

Like many new technologies, generative AI has been said to follow a path known as the Gartner hype cycle.

This widely used model describes a process in which the initial success of a technology leads to inflated public expectations that ultimately fail to materialize.

After the early “peak of inflated expectations” comes a “trough of disillusionment,” followed by a “slope of enlightenment” and finally, a “plateau of productivity.”

A Gartner report published in June listed most generative AI technologies as either at the peak of inflated expectations or still heading towards it.

The paper argued that most of these technologies are two to five years away from becoming fully productive.

Many compelling prototypes of generative AI products have been developed, but adopting them in practice has been less successful.

A study released last month by American think tank RAND suggested that 80% of AI projects fail, more than double the rate for non-AI projects.

"The RAND report lists many difficulties with generative AI, ranging from high investment requirements in data and AI infrastructure to a lack of needed human talent. However, the unusual nature of GenAI’s limitations represents a critical challenge," said Dr. Vitomir Kovanovic, an associate professor at the University of South Australia's Education Futures and Associate Director of its Centre for Change and Complexity in Learning (C3L), a research center studying the interplay between human and artificial cognition.

"For example, generative AI systems can solve some highly complex university admission tests yet fail very simple tasks. This makes it very hard to judge the potential of these technologies, which leads to false confidence."

Indeed, a recent study showed that the abilities of large language models such as GPT-4 do not always match what people expect of them. In fact, even capable models severely underperformed in high-stakes cases where incorrect responses could be catastrophic.

These findings suggest that these models can induce false confidence in their users. "Because they fluently answer questions, humans can reach overoptimistic conclusions about their capabilities and deploy the models in situations they are not suited for," Kovanovic explained.

"Experience from successful projects shows it is tough to make a generative model follow instructions. For example, Khan Academy’s Khanmigo tutoring system often revealed the correct answers to questions despite being instructed not to."

So what comes next? Kovanovic believes that as the AI hype begins to deflate and we move through the period of disillusionment, we should see more realistic AI adoption strategies.

For example, a recent survey of American companies found they are mainly using AI to enhance the quality of products (58%), improve efficiency (49%), and reduce labor costs (47%). So there's a good chance that we'll witness a more grounded integration of AI across industries moving forward.

Second, we are seeing a rise in smaller (and cheaper) generative AI models, trained on specific data and deployed locally to reduce costs and optimise efficiency.

Even OpenAI, which has led the race for large models, has released GPT-4o mini to reduce costs and improve performance.

And finally, we see a strong focus on providing AI literacy training and educating the workforce on how AI works, its potential and limitations, and best practices for ethical AI use. Kovanovic believes we will likely have to learn (and re-learn) how to use different AI technologies for years to come.

So in the end, the AI revolution might look more like an evolution, and as this Instagram account suggests, there might be some things that it will never manage to recreate.

My sister is crazier. She eats lemons the same way you eat oranges!

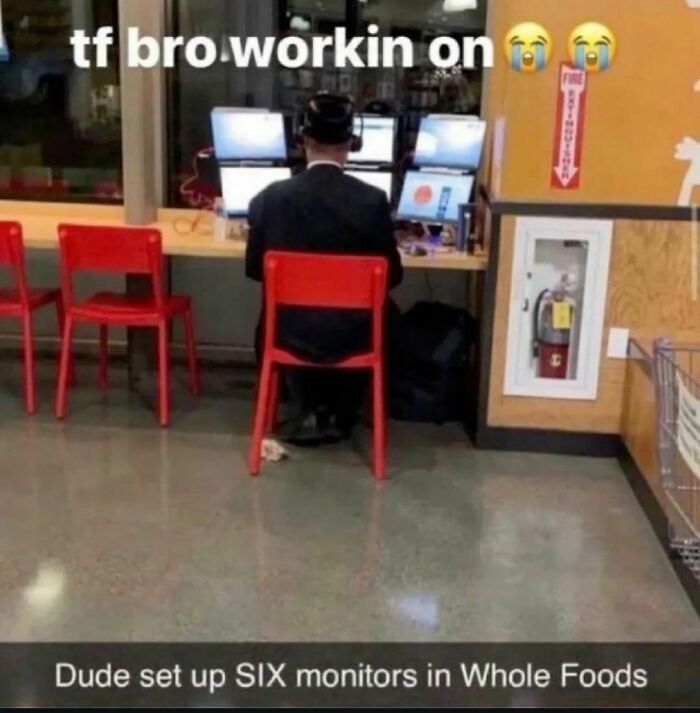

And set up next to the fire extinguisher. Dude knows he's overloading the circuit.

Don't know how ethical it is to put tape on a shell, since I read they have touch sensors on the shell. Maybe not the correct term, but it's 5 in the morning and I can't go back to sleep..

Maybe he likes to fly a jet fighter because passenger planes are boring.

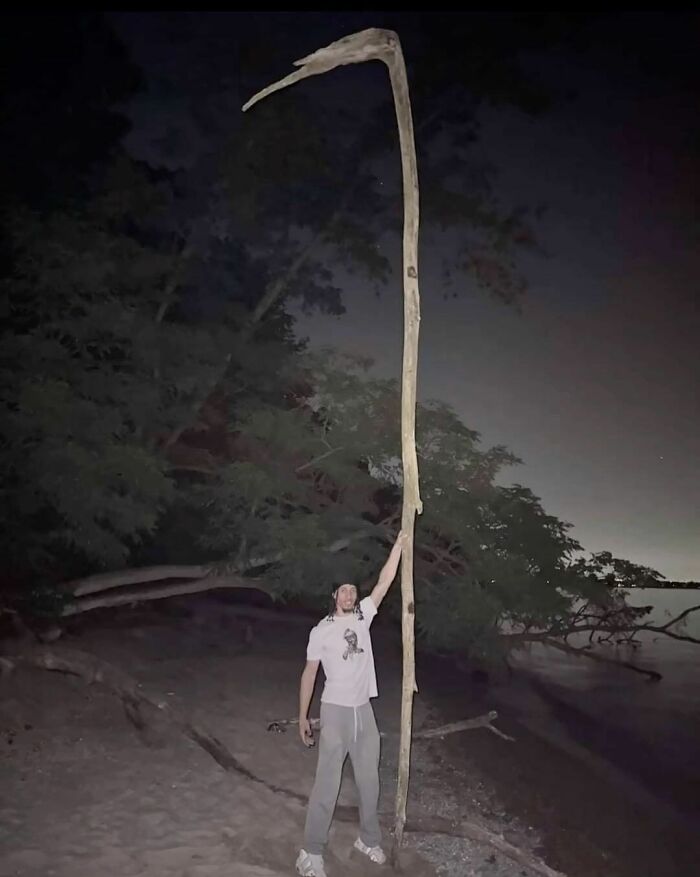

I also think I’m alone, but then the shadow man makes his appearance at night.

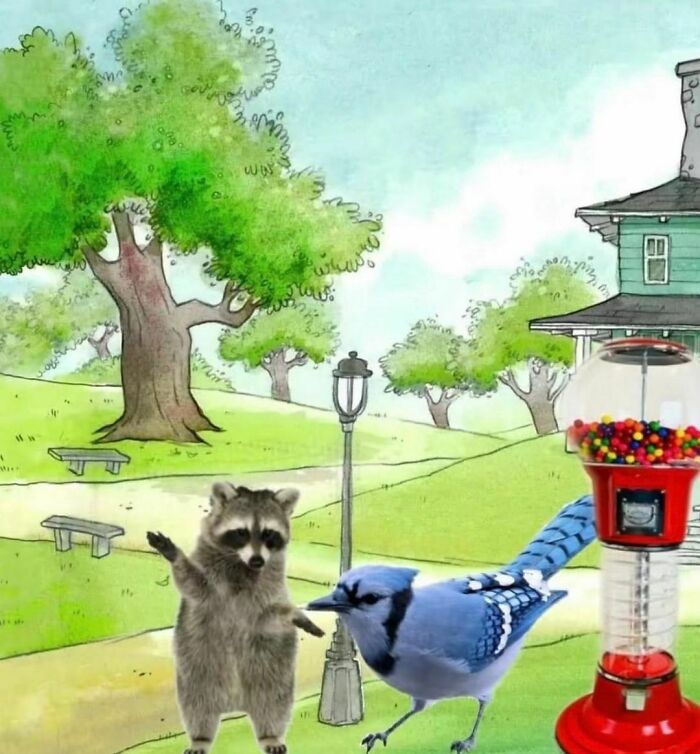

I think Threatening Spongebob is just to keep you from seeing the bird person in the back. I am afeared.

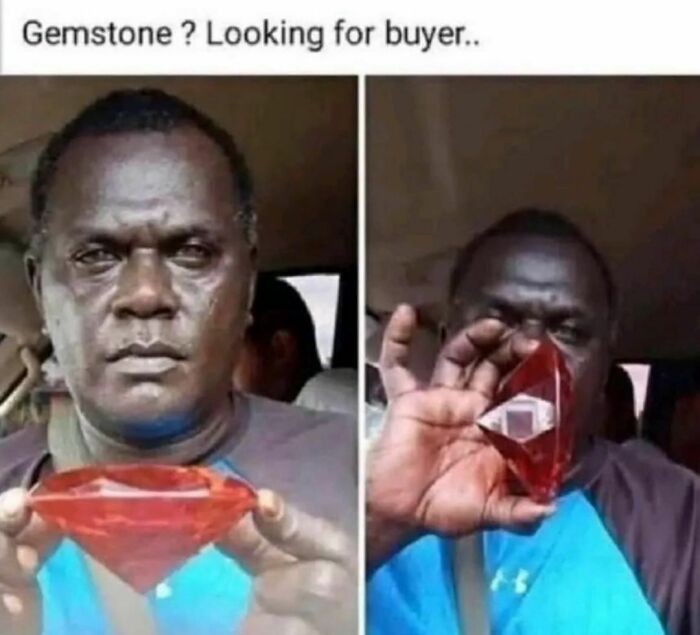

At first glance I thought they were grabbing a swarm of bees.

With a bald eagle in the background? Including the fake majestic scream, or the seagull-like one? 🤔
